Neural Networks

And how I struggle with them

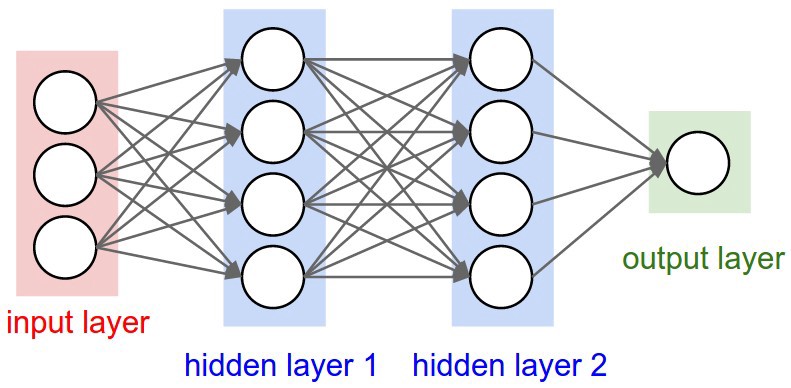

I’m sure that by now everyone has heard about neural networks, deep learning, AI, machine learning and any of the other 304875 hot new things that everyone should use in their projects/work/house/etc. After spending the better part of the last month working my way through coursework on neural networks, I came away with a few thoughts for myself (hi, future me!). Image taken from this article.

For starters, let me preface this by saying that I don’t know much about neural networks. I think this applies to most people who end up posting about their sigmoid activation function on Medium or reddit or AiStackExchange. I am not trying to imply that my knowledge is vastly superior or that I sit on some high horse of absolute knowledge; this is more how I “feel”.

I am using quotes around feel because that’s something that I’ve been hearing quite a lot. Well, not that exactly, but something similar - intuition. “This will build your intuition”/ “This is to get your intuition going”/ “As you might intuit” and the list goes on.

A number of articles try to get you to write your own neural network so that you build your intuition and get a feel for it, but I think that in this field especially, we shouldn’t rely on the feel of things. On top of that, a lot of these articles or courses try to get you to “build something”. Although I agree that building something at every stage helps your knowledge along, I don’t think this holds as well for neural networks, especially when huge steps are taken OVER elements that are quite important.

You might notice that most articles skip over the math required to understand the things you’re going to be using. Although some of them mention that you should have some prior knowledge, they don’t go into much detail, and I believe that detail is quite important, especially for understanding strange behavior in your network’s output. Should you increase the learning rate? How will this impact back propagation and the calculation of the deltas? How will it impact them over 1 epoch? Over 100? How about 10000 epochs?
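To make the learning rate question a bit more concrete for future me, here is a minimal sketch. It is not a neural network: I am assuming a toy loss `L(w) = w**2` with a single weight, and the `train` function and the specific learning rates are made up for illustration. It only shows how the size of each update (the "delta") depends on the learning rate, and how that compounds over epochs.

```python
def train(learning_rate, epochs, w=1.0):
    """Plain gradient descent on the toy loss L(w) = w**2 (an assumption,
    chosen because its gradient, 2*w, is easy to check by hand)."""
    for _ in range(epochs):
        grad = 2.0 * w                    # dL/dw for L(w) = w**2
        delta = -learning_rate * grad     # the weight update
        w += delta
    return w


if __name__ == "__main__":
    # Hypothetical learning rates: small, moderate, and too large.
    for lr in (0.01, 0.1, 1.1):
        for epochs in (1, 100):
            final_w = train(lr, epochs)
            print(f"lr={lr:<5} epochs={epochs:<4} final w={final_w:.3e}")
```

On this toy problem each step multiplies `w` by `1 - 2 * learning_rate`, so a small rate creeps toward the minimum at 0, a moderate one gets there quickly, and a rate above 1 makes `w` grow in magnitude every epoch. Real networks are messier, but knowing the math lets you at least ask the right question when the error blows up.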

Another big gripe I have is that very few of these articles concern themselves with saying WHY you should use one activation function over another. Why would you use Tanh or Sigmoid or LReLU or RReLU, and what’s the difference between them? If your neural network’s error is dropping with all of them, does it really matter? If yes, why, and if no, why not? I think this is a trend that has been spreading quite rapidly over the past few years: we are all too ready to hunt for surface-level knowledge. We end up having “approximate knowledge of many things”.
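One difference that I can at least put into numbers is how the gradient of each function behaves for large inputs. The sketch below is my own illustration, not something from the articles I am complaining about. The function names and the leaky ReLU slope of 0.01 are assumptions, and I left out RReLU because its slope is random during training.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def d_sigmoid(x):
    # Derivative of the sigmoid: s(x) * (1 - s(x))
    s = sigmoid(x)
    return s * (1.0 - s)


def d_tanh(x):
    # Derivative of tanh: 1 - tanh(x)**2
    return 1.0 - math.tanh(x) ** 2


def d_leaky_relu(x, slope=0.01):
    # slope=0.01 is an assumed value for the negative side
    return 1.0 if x > 0 else slope


if __name__ == "__main__":
    for x in (-5.0, 0.0, 5.0):
        print(
            f"x={x:+.1f}  "
            f"sigmoid'={d_sigmoid(x):.5f}  "
            f"tanh'={d_tanh(x):.5f}  "
            f"leaky_relu'={d_leaky_relu(x):.5f}"
        )
```

The gradients of Sigmoid and Tanh shrink toward zero as the input moves away from 0, while the leaky ReLU gradient stays constant on each side. That is one concrete reason the choice can matter even when the error is dropping with all of them, although it is far from the whole story.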

I will admit that some people might just want to have a play with this. Maybe they are not THAT interested and they just want to get an idea of what happens when you give your PC some numbers and tell it to give you some other numbers. If that’s the case, then those articles are all fine! If that’s not the case, then what a lot of these articles do is perpetuate the idea that this is easy, that anyone could do it and that everyone should. And while I agree with the latter part, I don’t think it’s easy. Far from it! But it seems that everyone is more interested in keeping up with the trends than in dedicating time and attention to building a solid foundation in the subject.

Another big issue I have is the overreliance on a lot of very powerful machine learning frameworks, with little understanding of what lies beneath the surface. TensorFlow, Keras and PyTorch are just some of the frameworks that have a lot of “quick” tutorials on how to get up and running with your own cat picture recognition neural network. And again, while that is fine if we’re just playing and experimenting, a lot of these articles or courses make claims about how much you will learn and how this will immediately benefit your job/school/etc.

So what should you do? Well, it’s difficult for me to say, seeing as I don’t know left from right yet. I know what I will do once I’m done with school and have some more time. I will go back, hit the books and brush up on my math knowledge. Knowing how to put together the derivative of a function is quite important, and although you can find the derivatives for most activation functions out there, finding them and understanding them (why is that the derivative? what is a derivative? why are you using it?) are different stories altogether. Once I finish that, I will look into getting more comfortable with Python, as it seems to be the language to use, and lastly I will attempt to build my own little project for my own little interest, using frameworks as little as possible. Once that step is done, the next one would be using this in a project somewhere else and seeing whether a framework would indeed help me get better performance, get more accurate results or get to the results faster!
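As a small first step on the “what is a derivative?” question, here is a sketch of the kind of check I have in mind: take a derivative formula you found somewhere and compare it against the slope you measure numerically. The helper names and the step size `h` are my own assumptions, and I am using the sigmoid only because it came up earlier.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x):
    # The formula you usually find online: s(x) * (1 - s(x))
    s = sigmoid(x)
    return s * (1.0 - s)


def numeric_derivative(f, x, h=1e-5):
    # Central difference: the slope of f measured across a tiny interval around x.
    # h=1e-5 is an assumed step size, small but not so small that rounding dominates.
    return (f(x + h) - f(x - h)) / (2.0 * h)


if __name__ == "__main__":
    for x in (-2.0, 0.0, 3.0):
        found = sigmoid_derivative(x)
        measured = numeric_derivative(sigmoid, x)
        print(f"x={x:+.1f}  formula={found:.8f}  measured={measured:.8f}")
```

If the two columns agree, the formula really does describe how fast the function changes at that point, which is exactly what back propagation uses it for. It doesn’t replace doing the math by hand, but it is a cheap way to catch a formula I copied down wrong.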

While the above might not be for everyone and while I might not do all of those things, I am convinced that in the end, the process itself will be a lot more rewarding the deeper my understanding is. And that might apply to you as well :)